[R] Adaptive Rejection Sampling

Adaptive rejection sampling is a statistical algorithm for generating samples from a univariate, log-concave density. Because of the adaptive nature of the algorithm, rejection rates are often very low.

The exposition of this algorithm follows the example given in Davison’s 2008 text, “Statistical Models.”

The Algorithm

The algorithm is fairly simple to describe:

- Establish a set of fixed points and evaluate the log-density, , and derivative of the log-density on the fixed points.

- Use these function evaluations to construct a piecewise-linear, upper bound for the log-density function, , via supporting tangent lines of the log-density at the fixed points.

- Let . Because of the piecewise-linear construction of , is piecewise-exponential, sampling is straightforward.

- Pick . If , accept ; else, draw another sample from .

- For any rejected by the above criteria, may be added to the initial set of fixed points and the piecewise-linear upper bound, , adaptively updated.

The Example

We apply the algorithm to Example 3.22 in Davison. Here we specify a log-concave density function. Note is the density, and is the concave log-density:

where is real valued, is a constant such that the integral of has unit area, , , , and .

R Code

First, define the function, h, and its derivative, dh.

## Davison, Example 3.22

params.r = 2

params.m = 10

params.mu = 0

params.sig2 = 1

## the log of a log-convex density function

ymin = -Inf

ymax = Inf

h = function(y){

v = params.r*y - params.m * log(1+exp(y)) - (y-params.mu)^2/(2*params.sig2) # plus normalizing const

return(v)

}

## derivative of h

dh = function(y)

{

params.r - params.m * exp(y) / (1 + exp(y)) - (y-params.mu)/params.sig2

}Define the function that computes the intersection points of the supporting tangent lines. Suppose denotes the fixed points. Then,

## compute the intersection points of the supporting tangent lines

zfix = function(yfixed)

{

yf0 = head(yfixed, n=-1)

yf1 = tail(yfixed, n=-1)

zfixed = yf0 + (h(yf0) - h(yf1) + (yf1 - yf0)*dh(yf1)) / (dh(yf1) - dh(yf0))

return(zfixed)

}and the piecewise-linear upper bound,

## evalutate the unnormalized, piecewise-linear upper-bound of the log-density

hplus = function(y, yfixed)

{

res = rep(0, length(y))

zfixed = zfix(yfixed)

piecewise.idx = findInterval(y, c(ymin, zfixed, ymax))

npieces = length(zfixed) + 2

for(pidx in 1:npieces){

yp = y[piecewise.idx == pidx]

xx = h(yfixed[pidx]) + (yp - yfixed[pidx])*dh(yfixed[pidx])

res[piecewise.idx == pidx] = xx

}

return(res)

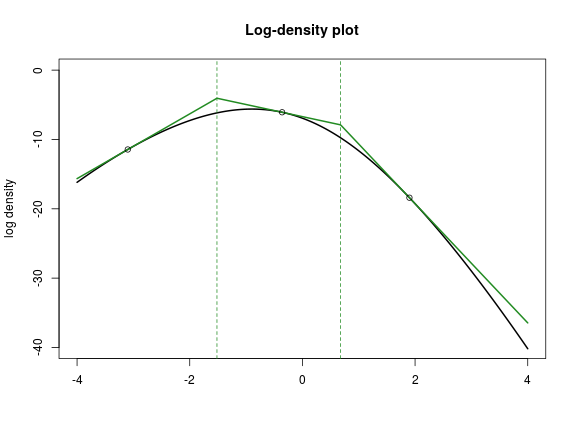

}In the following plot, is shown in black, and is in green. The black circles are , and the dashed green vertical lines are .

We implement a vectorized function to compute the (normalized) CDF of :

In particular, the above formulation means that we can precompute which means it is only necessary to compute the last, non-zero integral for each .

gplus.cdf = function(vals, yfixed)

{

# equivalently: integrate(function(z) exp(hplus(z, yfixed)), lower=-Inf, upper = vals)

zfixed = zfix(yfixed)

zlen = length(zfixed)

pct = numeric(length(vals))

norm.const = 0

for(zi in 0:zlen) {

if(zi == 0)

{

zm = -Inf

} else {

zm = zfixed[zi]

}

if(zi == zlen)

{

zp = Inf

} else {

zp = zfixed[zi+1]

}

yp = yfixed[zi+1]

ds = exp(h(yp))/dh(yp) * ( exp((zp - yp)*dh(yp)) - exp((zm - yp)*dh(yp)) )

cidx = zm < vals & vals <= zp

hidx = vals > zp

pct[cidx] = pct[cidx] + exp(h(yp))/dh(yp) * ( exp((vals[cidx] - yp)*dh(yp)) - exp((zm - yp)*dh(yp)) )

pct[hidx] = pct[hidx] + ds

norm.const = norm.const + ds

}

l = list(

pct = pct / norm.const,

norm.const = norm.const

)

return(l)

}Next, we write a function to sample from . This proceeds via a probability integral transform, inverting realizations from a distribution. Using the previous sum-of-integrals formulation for , this requires a search across and then inverting a single integral.

## sample from the gplus density

gplus.sample = function(samp.size, yfixed)

{

zfixed = zfix(yfixed)

gp = gplus.cdf(zfixed, yfixed)

zpct = gp$pct

norm.const = gp$norm.const

ub = c(0, zpct, 1)

unif.samp = runif(samp.size)

fidx = findInterval(unif.samp, ub)

num.intervals = length(ub) - 1

zlow = c(ymin, zfixed)

res = rep(NaN, length(unif.samp))

for(ii in 1:num.intervals)

{

ui = unif.samp[ fidx == ii ]

if(length(ui) == 0)

{

next

}

## Invert the gplus CDF

yp = yfixed[ii]

zm = zlow[ii]

tmp = (ui - ub[ii]) * dh(yp) * norm.const / exp(h(yp)) + exp( (zm - yp)*dh(yp) )

tmp = yp + log(tmp) / dh(yp)

res[ fidx == ii ] = tmp

}

return(res)

}Results

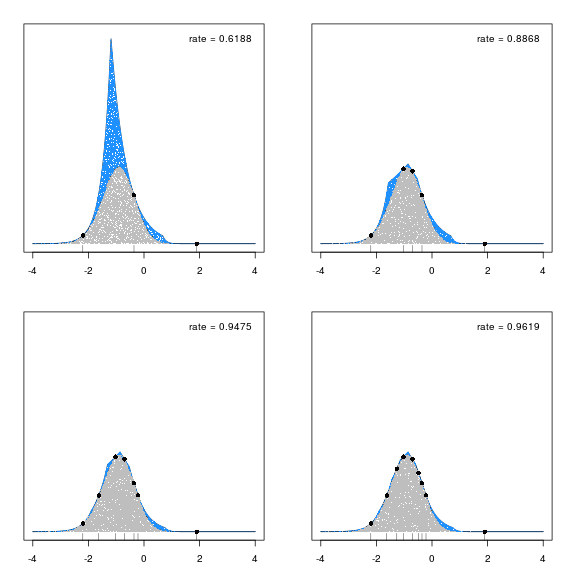

The results are impressive. It takes only a handful of fixed points to reach an acceptance rate that exceed 95%. The figure below shows this convergence. In each plot, 10000 samples are taken from . Blue dots show rejected samples, and gray dots show samples from the target density, . After each experiment, two of the rejected points are chosen as new fixed points. The black dots, and corresponding rug plot, indicate these fixed points. With only 9 fixed points, the acceptance rate is 96%.